Huffman Codes

Contents

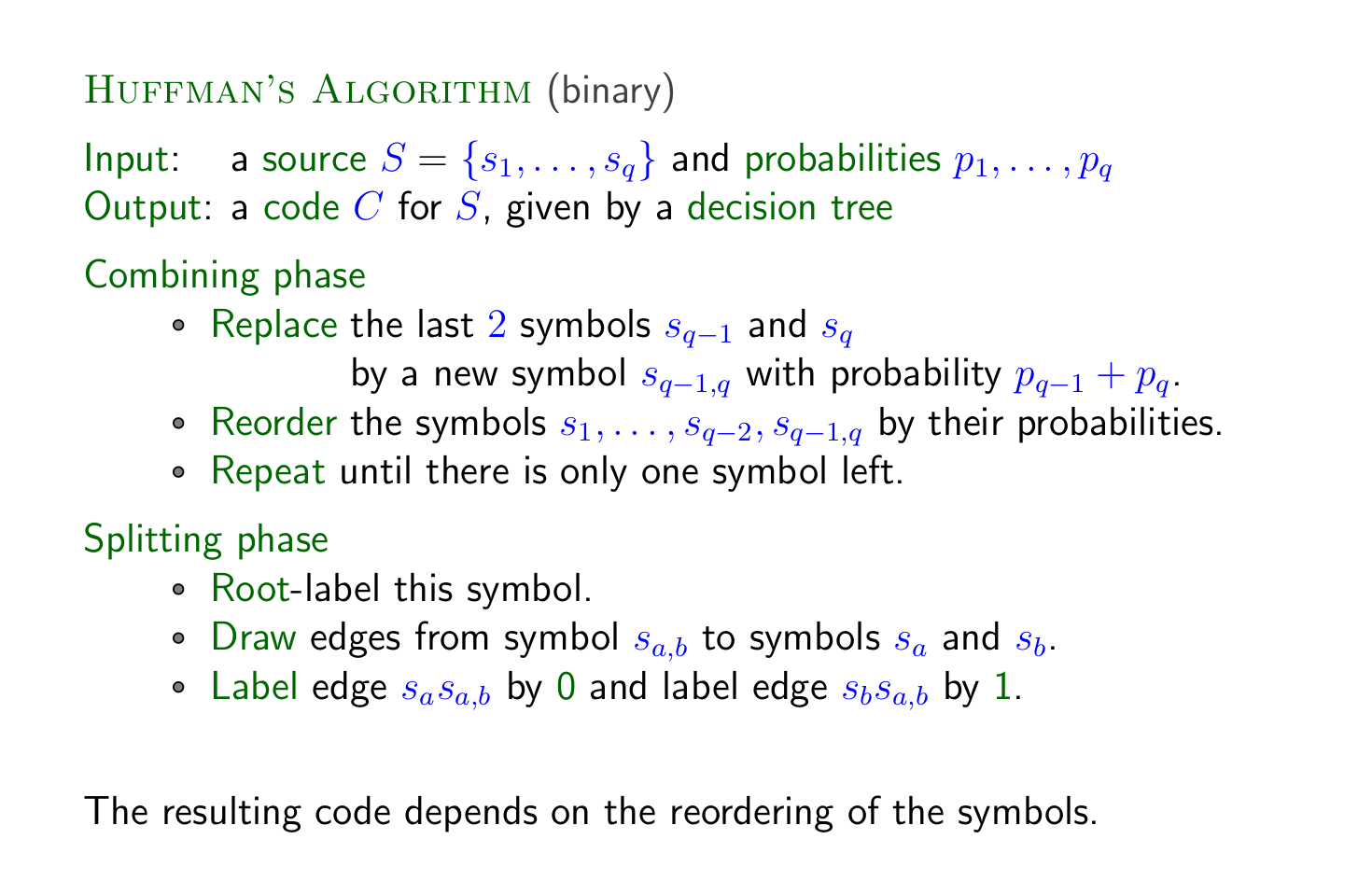

Huffman’s Algorithm

The Huffman coding scheme generates the best possible uniquely decodable code.

- All probabilities have to be known before any codeword can be found

There is no formula for the length of the codewords

Need to have

nelements such thatn mod (r-1) === 1

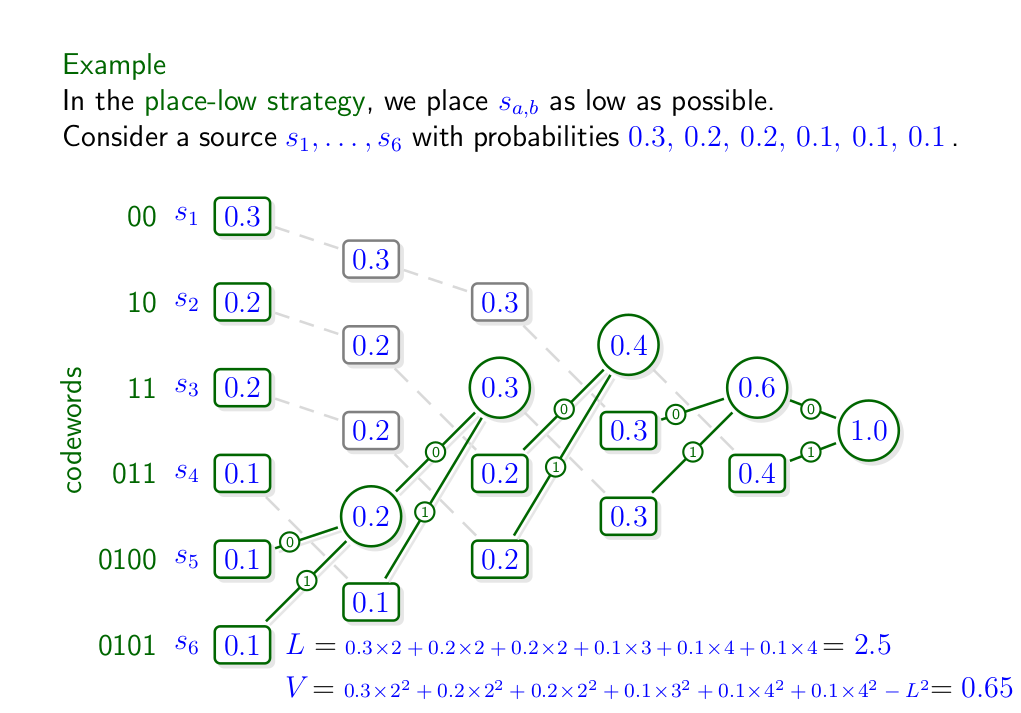

Place-low Strategy

Place $s_{a,b}$ as low as possible

- Combine the two codewords with the lowest probabilities

- Sort the probabilities, placing the combined probability as low as possible

- Repeat

- Then label top-down $0, 1, …, n$

Then trace the tree from the paths

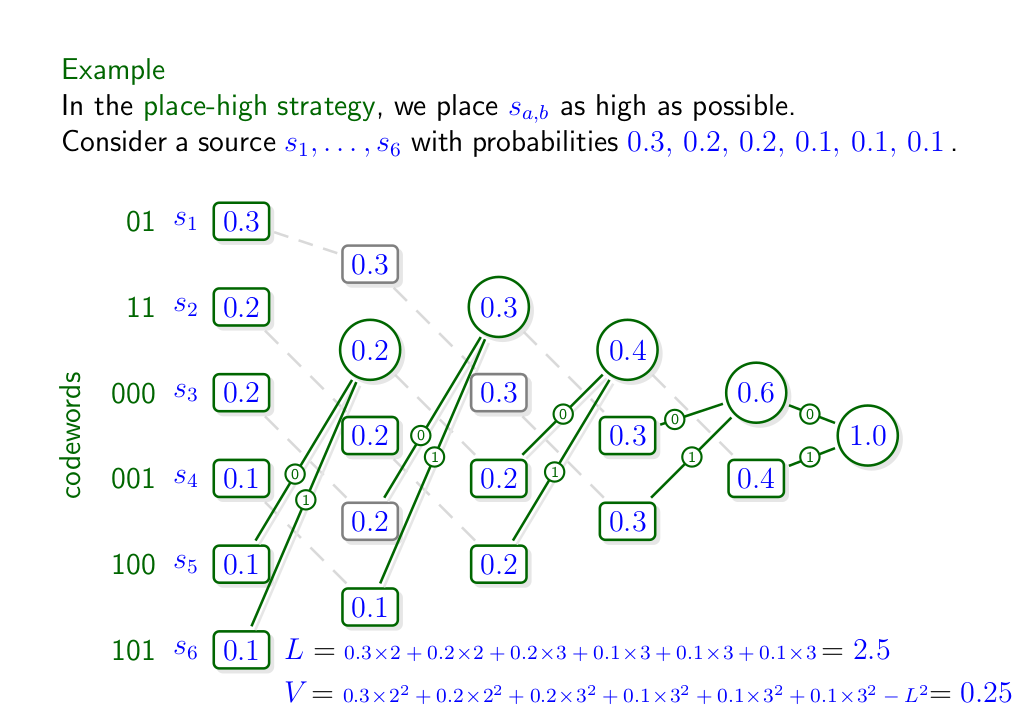

Place-high Strategy

Place $s_{a,b}$ has high as possible.

- Combine the two codewords with the lowest probabilities

- Sort the probabilities, placing the combined probability as high as possible

- Repeat

- Then label top-down $0, 1, …, n$

Average Length

The usual method to calculate the average length is to sum the product of each codeword’s length with its probability.

Knuth’s Theorem

Knuth’s theorem allows us to take a short-cut in finding the average length.

The average codeword length $L$ of each Huffman code is the sum of all child node probabilities / combined nodes