Seminar: Neural Network Accelerators

Contents

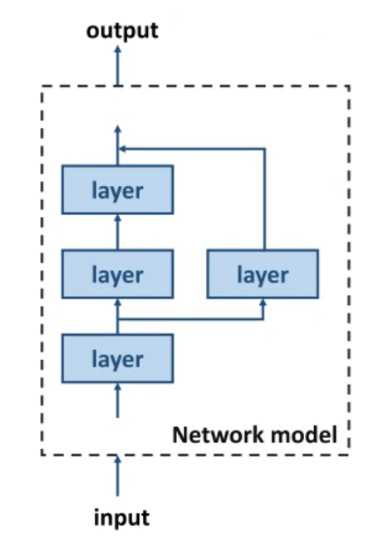

Layers

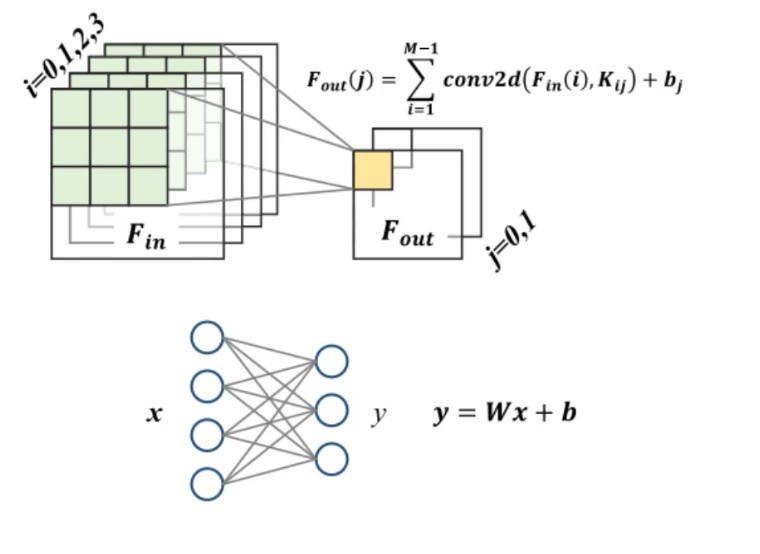

Convolutional Layer

- Main building block

- Contains a set of filters (mathematical kernels) whose parameters are learned during training

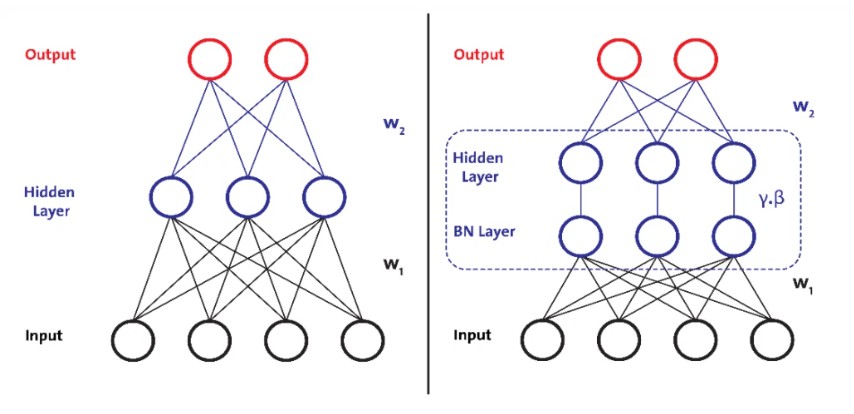

Normalisation Layer

- Normalises the layer's output, which stabilises training and can help reduce overfitting

- Prevents small parameter changes from being amplified through later layers

Pooling Layer

- Sliding 2D filter that summarises the features within a region

- Reduces the dimensions of a feature map
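As an illustration of the two bullets above, here is a minimal max-pooling sketch in plain Python. The choice of max (rather than average) pooling, the 2x2 window, the stride of 2 and the function name `max_pool2d` are assumptions made for the example, not taken from the slides.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Slide a size x size window over a 2D list and keep the maximum of each region."""
    rows = len(feature_map)
    cols = len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        out_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect every value covered by the window at (r, c).
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            out_row.append(max(window))
        pooled.append(out_row)
    return pooled


# Hypothetical 4x4 feature map; pooling halves each dimension.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 2],
    [7, 2, 9, 4],
    [1, 0, 3, 5],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 2], [7, 9]]
```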

Fully Connected Layer

Applications

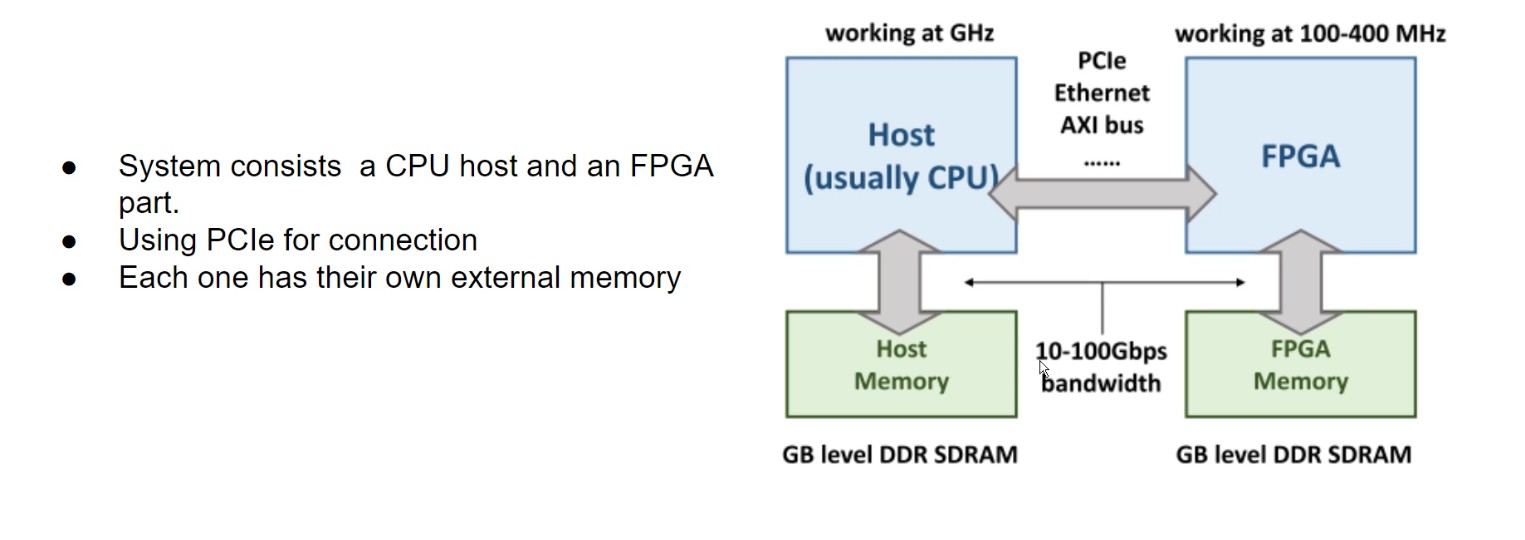

Neural networks are computationally heavy when run on a CPU, so an FPGA-based implementation can be cost-effective

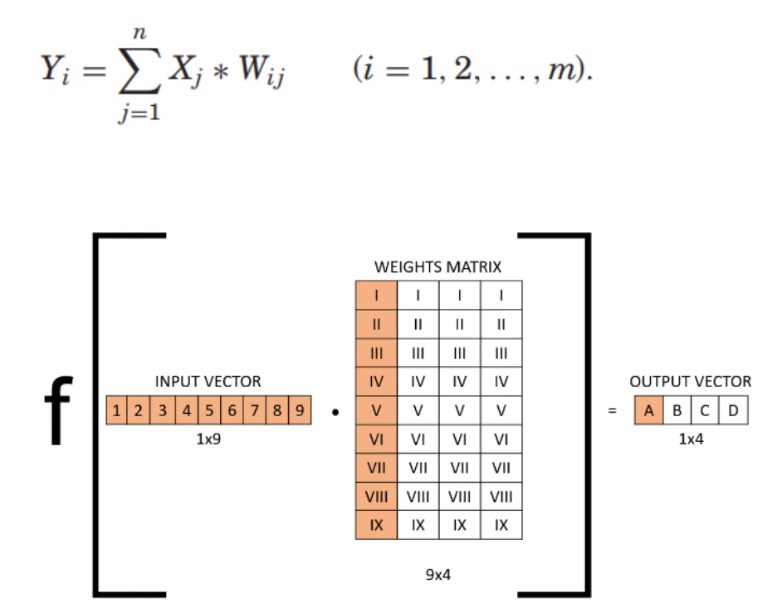

Maths

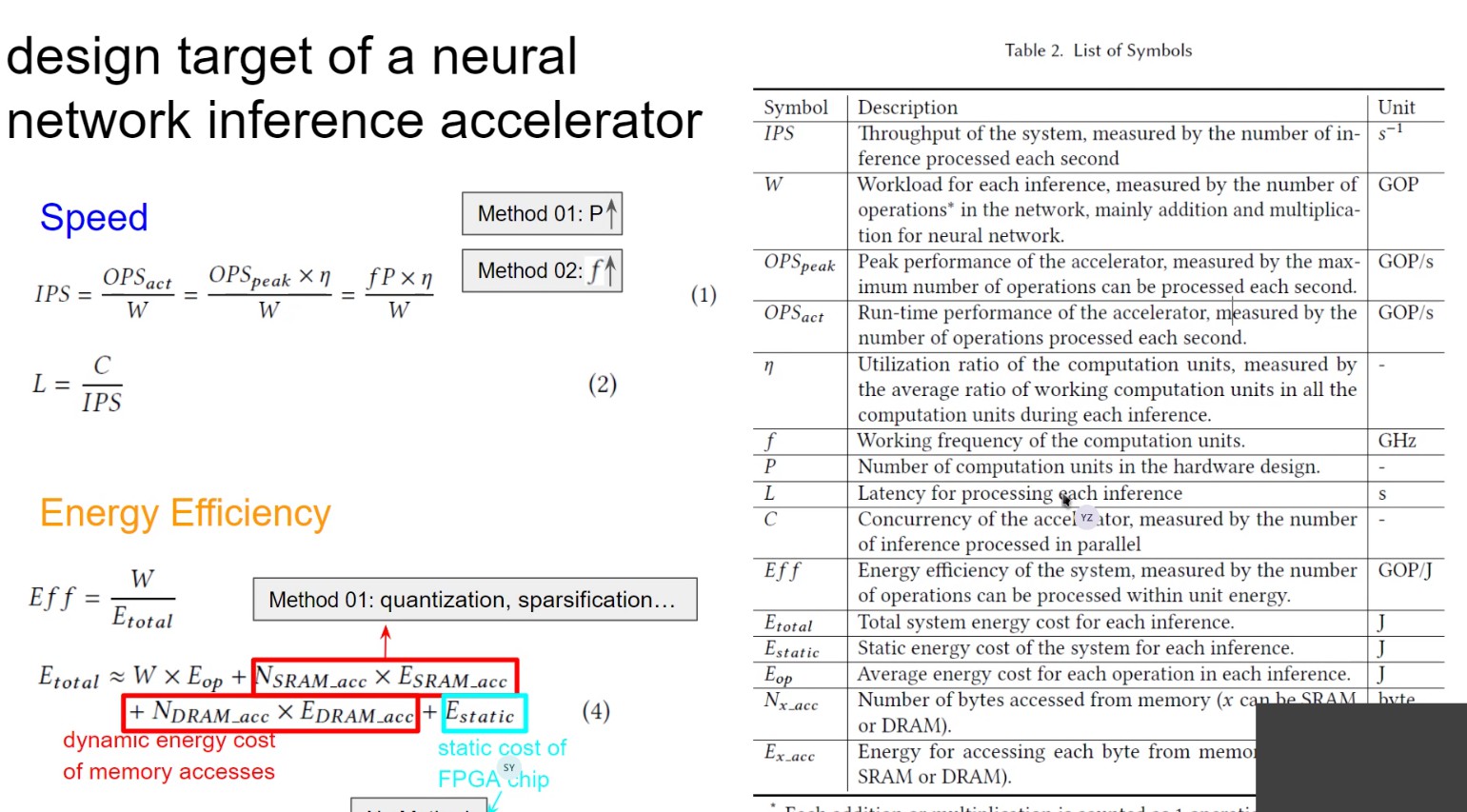

Throughput (IPS) = (Number of units * frequency * utilisation ratio) / work

Latency = Concurrency / throughput
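A small worked example of the two formulas above. All numbers (1000 computation units, 200 MHz, 50% utilisation, 10^9 operations per inference, 4 inferences in flight) are hypothetical values chosen only to make the arithmetic easy to follow.

```python
def throughput_ips(num_units, frequency_hz, utilisation, work_per_inference):
    """Inferences per second = (units * frequency * utilisation) / work per inference."""
    return num_units * frequency_hz * utilisation / work_per_inference


def latency_s(concurrency, throughput):
    """Latency in seconds = number of inferences in flight / throughput."""
    return concurrency / throughput


# Hypothetical accelerator and workload.
ips = throughput_ips(num_units=1000, frequency_hz=200e6,
                     utilisation=0.5, work_per_inference=1e9)
latency = latency_s(concurrency=4, throughput=ips)
print(ips, latency)
```

With these assumed numbers the throughput is about 100 inferences per second and the latency about 40 ms. Halving the work per inference (for example through compression) would double the throughput.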

- Performance can be increased at the cost of some accuracy

- Increase energy efficiency?

Model Compression Methods

Data Quantization

Convert values into a lower-precision, quantised representation

e.g. changing a floating-point value into an 8-bit integer
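A minimal sketch of converting floating-point values to 8-bit integers. It assumes a symmetric scheme with a single scale factor derived from the largest absolute value; the slides do not say which scheme is used, and the function names are illustrative.

```python
def quantize_int8(values):
    """Symmetric linear quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Divide by the scale, round to the nearest integer, and clamp to the int8 range.
    quantised = [max(-127, min(127, round(v / scale))) for v in values]
    return quantised, scale


def dequantize(quantised, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantised]


weights = [0.5, -1.27, 0.02, 1.0]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 2, 100]
```

Each restored value differs from the original by at most half a quantisation step (`scale / 2`), which is the accuracy traded for the smaller data type.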

Linear Quantization

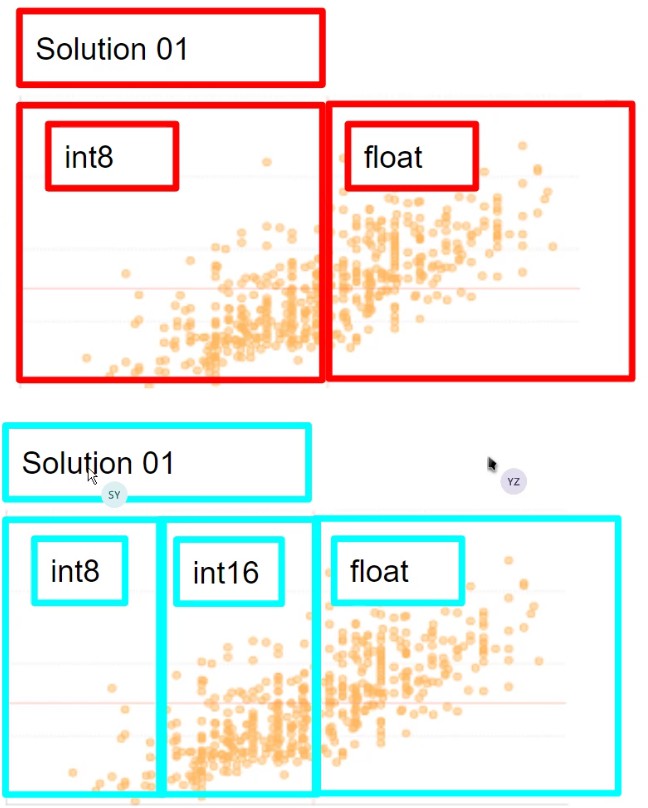

- Dynamic-precision data quantization

Different layers have different precision requirements

- Analyse data distribution

- Propose multiple solutions with different bit-width combinations

- Choose the best solution
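The three steps above can be sketched as a brute-force search. This is only an illustration under stated assumptions: the per-layer scale is taken from the maximum absolute value, the candidate bit-widths are 4 and 8, "best" means lowest total squared error, and `bit_budget` is a hypothetical constraint on the sum of the chosen bit-widths.

```python
from itertools import product


def quantise(values, bits):
    """Symmetric linear quantisation with a per-layer scale taken from the data range."""
    levels = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values) or 1.0  # step 1: analyse the distribution
    scale = max_abs / levels
    return [round(v / scale) * scale for v in values]


def squared_error(values, bits):
    return sum((q - v) ** 2 for q, v in zip(quantise(values, bits), values))


def choose_bit_widths(layers, options=(4, 8), bit_budget=12):
    """Try every per-layer bit-width combination; keep the lowest-error one within budget."""
    names = list(layers)
    best = None
    for combo in product(options, repeat=len(names)):  # step 2: propose solutions
        if sum(combo) > bit_budget:
            continue
        error = sum(squared_error(layers[n], b) for n, b in zip(names, combo))
        if best is None or error < best[0]:            # step 3: choose the best
            best = (error, dict(zip(names, combo)))
    return best[1]


# Hypothetical layers: conv1 has spread-out values, fc only ever holds +1 or -1.
layers = {
    "conv1": [0.9, -0.1, 0.05, 0.4],
    "fc": [1.0, -1.0, 1.0, -1.0],
}
chosen = choose_bit_widths(layers)
print(chosen)  # {'conv1': 8, 'fc': 4}
```

The layer whose values need fine resolution receives the wider type, while the layer that is represented almost exactly at 4 bits gives up its bits.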

- Hybrid Quantization

Keep the first and last layers in their original data type

Only the intermediate layers change type

Non-Linear Quantization

Lookup table / hashtable
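A minimal sketch of lookup-table quantisation: each weight is stored as a small index into a table of representative values, and the levels do not have to be evenly spaced. The codebook values here are hypothetical; in practice they might come from clustering the trained weights.

```python
def build_indices(weights, codebook):
    """Replace each weight by the index of the nearest codebook entry."""
    return [min(range(len(codebook)), key=lambda i: abs(codebook[i] - w))
            for w in weights]


def lookup(indices, codebook):
    """Recover approximate weights by reading the table."""
    return [codebook[i] for i in indices]


codebook = [-0.5, -0.05, 0.05, 0.5]        # hypothetical, non-uniformly spaced levels
weights = [0.47, -0.04, 0.06, -0.6, 0.02]  # hypothetical weights
indices = build_indices(weights, codebook)
restored = lookup(indices, codebook)
print(indices)   # [3, 1, 2, 0, 2]
print(restored)  # [0.5, -0.05, 0.05, -0.5, 0.05]
```

With four table entries, each weight needs only a 2-bit index.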

Hardware Design - Acceleration

Computational Unit Level

e.g. low bit-width arithmetic, fast convolution algorithms

Binarized Neural Network

- Constrain weights and activations to binary values (+1 / -1)

- Convolutional and FC layers can be expressed as binary operations: an XNOR followed by a popcount

- Slight loss of accuracy

- Some techniques use a gain term to restore the accuracy
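A sketch of the XNOR-popcount idea for a single dot product. It assumes the common encoding where +1 is stored as bit 1 and -1 as bit 0; the helper names are illustrative. XNOR marks the positions where the two signs agree, so the dot product equals (number of agreements) minus (number of disagreements).

```python
def pack_bits(values):
    """Pack a list of +1/-1 values into an integer, one bit per value (+1 -> 1, -1 -> 0)."""
    word = 0
    for v in values:
        word = (word << 1) | (1 if v > 0 else 0)
    return word


def xnor_popcount_dot(a_bits, b_bits, n):
    """Dot product of two n-element +1/-1 vectors stored as bit patterns."""
    mask = (1 << n) - 1
    # XNOR = NOT XOR; the mask discards bits above position n.
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")
    return 2 * matches - n  # matches - (n - matches)


activations = [1, -1, 1, 1, -1, -1, 1, -1]
weights = [1, 1, -1, 1, -1, 1, 1, -1]
binary_result = xnor_popcount_dot(pack_bits(activations), pack_bits(weights), len(weights))
reference = sum(a * w for a, w in zip(activations, weights))
print(binary_result, reference)  # 2 2
```

In hardware the multiply-accumulate units are replaced by XNOR gates and a bit counter, which is why this suits low bit-width computational units.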

Factorised Dot Product

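For non-linear quantisation, if the number of distinct weight values is smaller than the kernel size, the inputs that share a weight can be summed first and then multiplied once (add, then multiply) instead of multiplying every input by its weight and then summing (multiply, then add)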
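A sketch of the reordering for one 3x3 kernel position (9 inputs) with a hypothetical codebook of 3 distinct weight values. The direct version needs 9 multiplications; the factorised version needs 3, one per codebook entry.

```python
def direct_dot(inputs, weight_indices, codebook):
    """Multiply, then add: one multiplication per input."""
    return sum(x * codebook[idx] for x, idx in zip(inputs, weight_indices))


def factorised_dot(inputs, weight_indices, codebook):
    """Add, then multiply: one multiplication per distinct weight value."""
    sums = [0.0] * len(codebook)
    for x, idx in zip(inputs, weight_indices):
        sums[idx] += x  # accumulate inputs that share the same weight
    return sum(s * w for s, w in zip(sums, codebook))


codebook = [-1.0, 0.5, 2.0]                   # hypothetical quantised weight values
weight_indices = [0, 1, 2, 2, 1, 0, 1, 1, 2]  # flattened 3x3 kernel as table indices
inputs = [1, 2, 3, 4, 5, 6, 7, 8, 9]          # flattened 3x3 input patch
print(direct_dot(inputs, weight_indices, codebook))      # 36.0
print(factorised_dot(inputs, weight_indices, codebook))  # 36.0
```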

Fast Convolution Algorithms

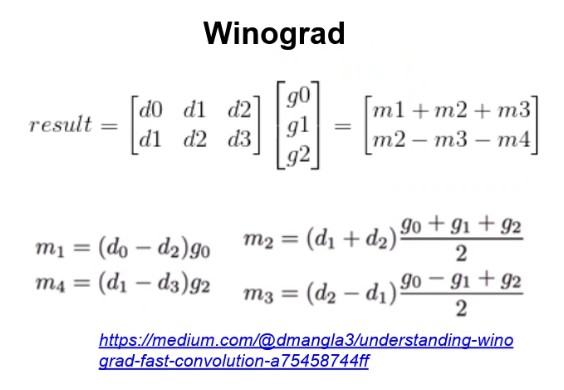

DFT, FFT, Winograd

Convolutions can be expressed as a matrix multiplication!

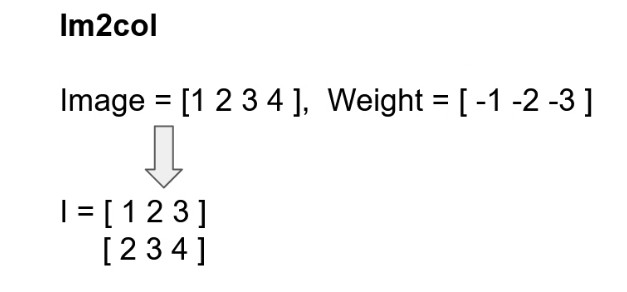

Image2Column - Convert convolution into a matrix multiplication
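A minimal Image2Column (im2col) sketch for a single-channel image with stride 1 and no padding, all of which are simplifying assumptions. Every kernel-sized patch is flattened into a row, so the convolution becomes a product of the patch matrix with the flattened kernel. As is usual in neural networks, the kernel is not flipped (strictly, this is a cross-correlation).

```python
def im2col(image, k):
    """Flatten every k x k patch of a 2D image into one row of the patch matrix."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(rows - k + 1):
        for c in range(cols - k + 1):
            patches.append([image[r + i][c + j]
                            for i in range(k) for j in range(k)])
    return patches


def conv2d_as_matmul(image, kernel):
    """Compute the convolution as (patch matrix) x (flattened kernel vector)."""
    k = len(kernel)
    flat_kernel = [w for row in kernel for w in row]
    flat_out = [sum(p * w for p, w in zip(patch, flat_kernel))
                for patch in im2col(image, k)]
    out_cols = len(image[0]) - k + 1
    # Reshape the flat result back into a 2D output feature map.
    return [flat_out[i:i + out_cols] for i in range(0, len(flat_out), out_cols)]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
result = conv2d_as_matmul(image, kernel)
print(result)  # [[-4, -4], [-4, -4]]
```

The patch matrix duplicates overlapping pixels, so the method trades extra memory for a regular matrix multiplication that maps well onto a multiplier array.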

Winograd Multiplication - Fast multiplication algorithm

Winograd multiplication is not commonly implemented in FPGA designs because of its higher resource requirement
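To show where the saving comes from, here is a sketch of the smallest 1D case of Winograd's minimal filtering algorithm, usually written F(2,3); that the slides refer to this particular algorithm is an assumption. Two outputs of a 3-tap filter need 6 multiplications when computed directly but only 4 here, at the cost of extra additions. The filter-side terms would normally be precomputed once per filter.

```python
def direct_f23(d, g):
    """Two outputs of a 3-tap filter, computed directly: 6 multiplications."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d, g):
    """The same two outputs using 4 multiplications (m1..m4)."""
    # Filter transform: depends only on g, so it can be precomputed.
    g_sum = (g[0] + g[1] + g[2]) / 2
    g_alt = (g[0] - g[1] + g[2]) / 2
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * g_sum
    m3 = (d[2] - d[1]) * g_alt
    m4 = (d[1] - d[3]) * g[2]
    return [m1 + m2 + m3, m2 - m3 - m4]


d = [1, 2, 3, 4]  # four consecutive input samples (hypothetical)
g = [2, 4, 6]     # 3-tap filter (hypothetical)
print(direct_f23(d, g))    # [28, 40]
print(winograd_f23(d, g))  # [28.0, 40.0]
```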

Layer Level

Network structure optimisations

e.g. Loop parameters, data transfer optimisations

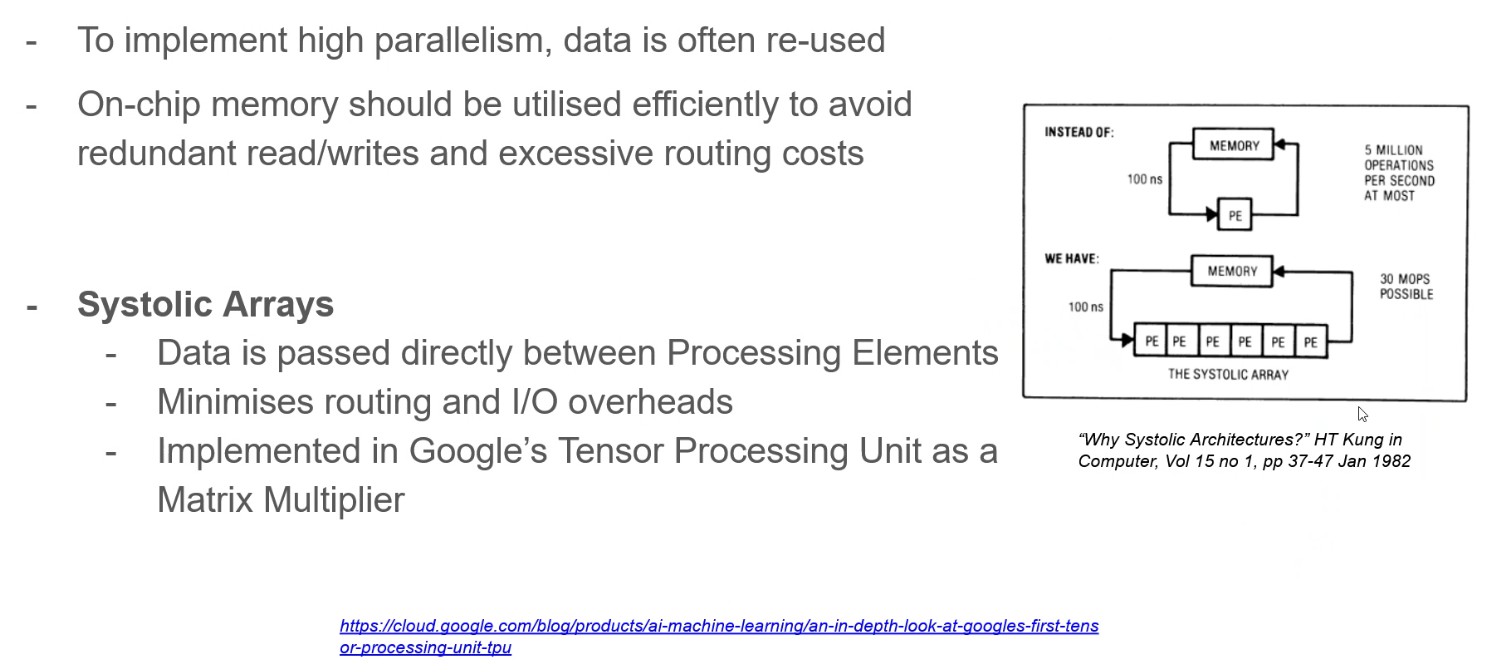

Systolic Array

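Data is passed between neighbouring processing elements, so each value fetched from memory is reused many times across a highly parallel array.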
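A cycle-by-cycle sketch of a systolic array computing a matrix product. It assumes an output-stationary layout (each processing element, PE, accumulates one output value), which is one of several possible dataflows and is not specified in the slides. Rows of A enter from the left and columns of B from the top, each skewed by one cycle per row or column; every PE forwards its operands to its right and lower neighbours.

```python
def systolic_matmul(A, B):
    """Simulate an n x m output-stationary systolic array computing A (n x k) times B (k x m)."""
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # one accumulator per PE
    a_reg = [[0] * m for _ in range(n)]  # operand each PE currently holds from the left
    b_reg = [[0] * m for _ in range(n)]  # operand each PE currently holds from above
    for t in range(n + m + k - 2):       # cycles until the last operand reaches the far corner
        # Update from the far corner backwards so each PE reads its neighbour's
        # value from the previous cycle, like a register shift.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:   # left edge: feed row i of A, delayed by i cycles
                    a_reg[i][j] = A[i][t - i] if 0 <= t - i < k else 0
                else:        # otherwise reuse the left neighbour's operand
                    a_reg[i][j] = a_reg[i][j - 1]
                if i == 0:   # top edge: feed column j of B, delayed by j cycles
                    b_reg[i][j] = B[t - j][j] if 0 <= t - j < k else 0
                else:        # otherwise reuse the upper neighbour's operand
                    b_reg[i][j] = b_reg[i - 1][j]
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = systolic_matmul(A, B)
print(C)  # [[19, 22], [43, 50]]
```

Each element of A and B is read from memory once at the array edge and then reused by every PE along its row or column.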

System Design Level

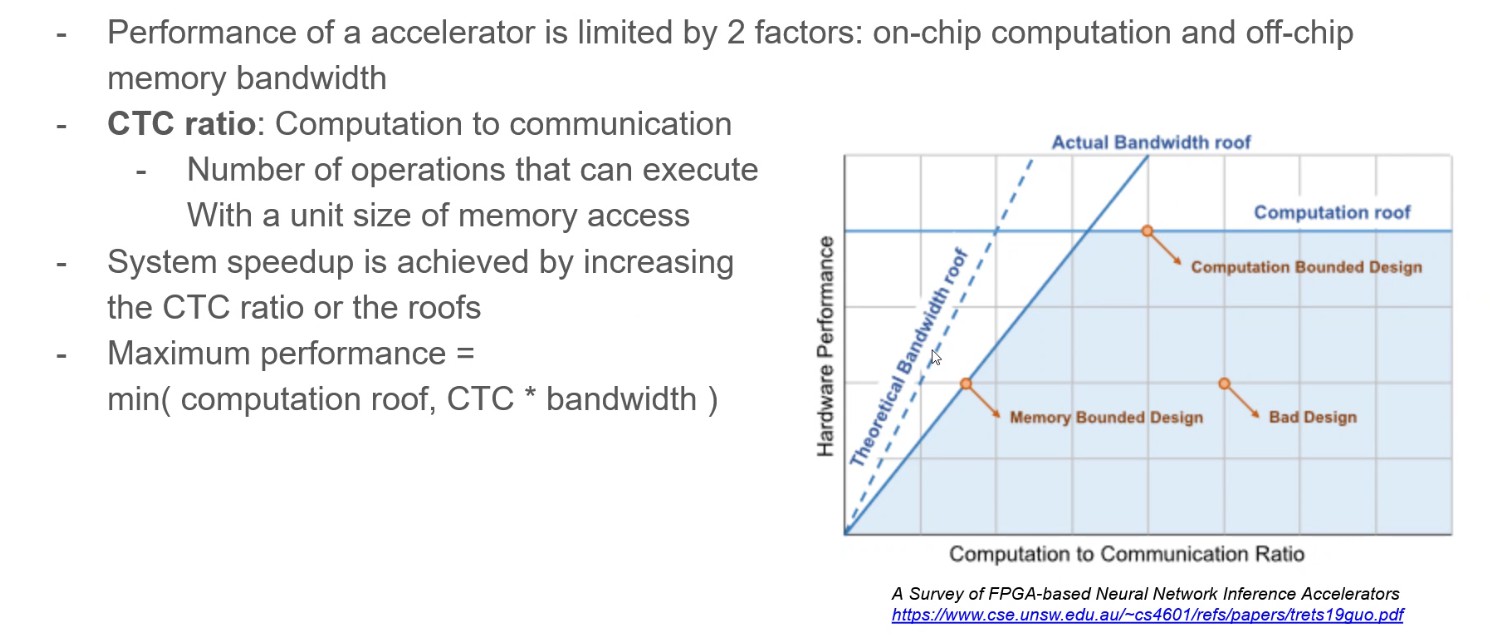

Roofline Model

A design's efficiency can be characterised by its CTC ratio (computation-to-communication ratio)
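A small sketch of how the CTC ratio is used in the roofline model: attainable performance is the lower of the peak compute rate and the rate at which memory can supply data, which is bandwidth multiplied by the CTC ratio. The platform numbers (100 GOP/s peak, 4 GB/s memory bandwidth) and the two designs are hypothetical.

```python
def attainable_gops(peak_gops, bandwidth_gb_per_s, ctc_ops_per_byte):
    """Roofline: performance is capped by compute or by memory traffic, whichever is lower."""
    return min(peak_gops, bandwidth_gb_per_s * ctc_ops_per_byte)


# Hypothetical platform.
PEAK_GOPS = 100.0
BANDWIDTH_GB_PER_S = 4.0

# Two hypothetical designs with different CTC ratios (operations per byte transferred).
low_reuse = attainable_gops(PEAK_GOPS, BANDWIDTH_GB_PER_S, ctc_ops_per_byte=10)
high_reuse = attainable_gops(PEAK_GOPS, BANDWIDTH_GB_PER_S, ctc_ops_per_byte=50)
print(low_reuse, high_reuse)  # 40.0 100.0
```

The first design is memory-bound (40 GOP/s), the second reaches the compute roof (100 GOP/s). Raising the CTC ratio through data reuse is what moves a design from the first regime to the second.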